Paper on a Near-SRAM Computing Architecture for Edge AI Applications accepted at ISQED 2025

We're excited to share that our paper, "Keep All in Memory with Maxwell: a Near-SRAM Computing Architecture for Edge AI Applications", has been accepted at ISQED 2025!

Recent advances in machine learning have dramatically increased model sizes and computational requirements, placing growing strain on computing systems. This tension is particularly acute in resource-constrained edge scenarios. There, two things are key: careful hardware acceleration of compute-intensive patterns, and optimized data reuse to limit costly data transfers.

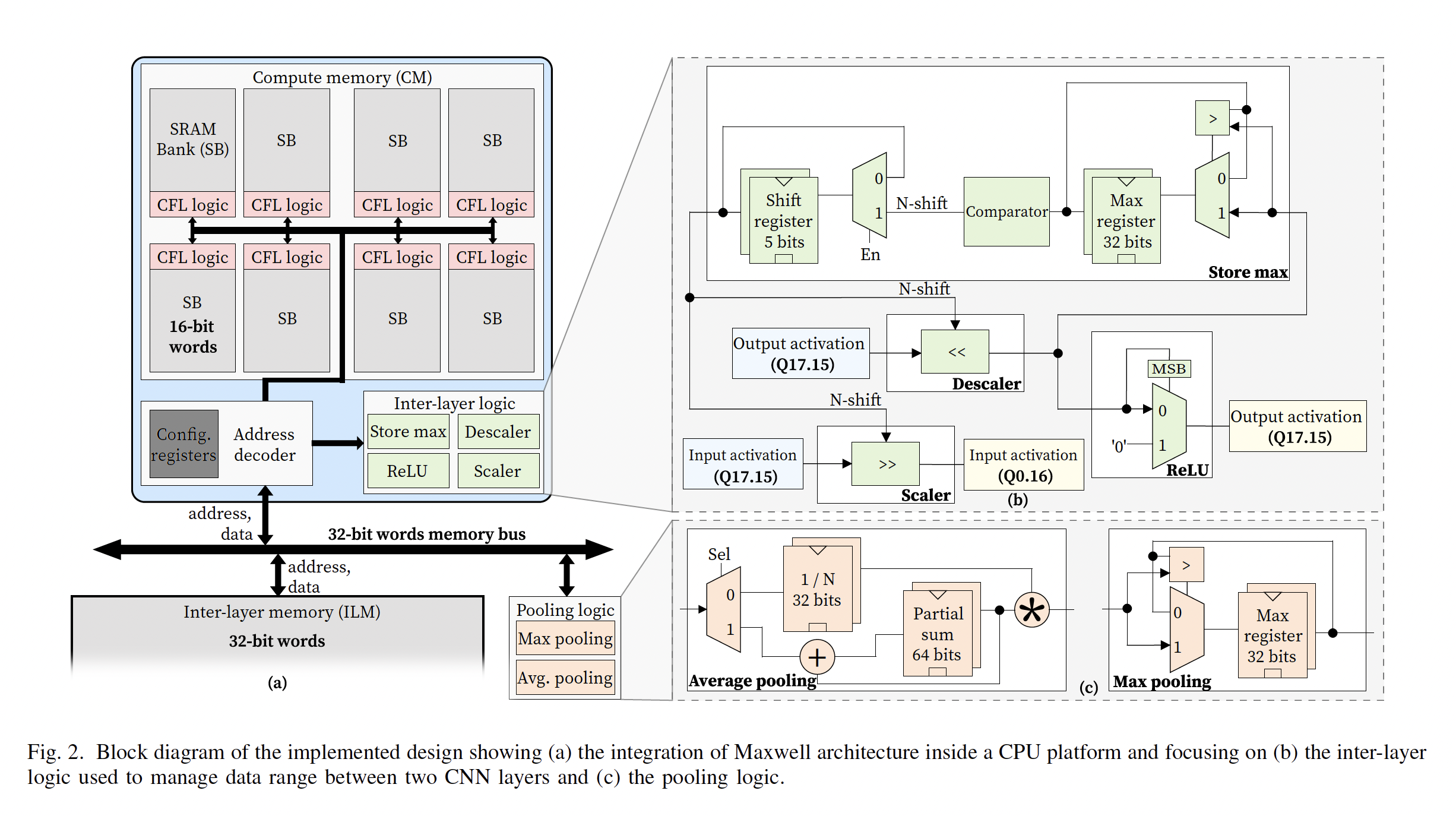

To address these challenges, we present Maxwell, a novel compute-memory architecture that supports the execution of entire inference algorithms near memory. By leveraging the regular structure of memory arrays, Maxwell achieves a high degree of parallelization for both convolutional (CONV) and fully connected (FC) layers, while supporting fine-grained quantization. The architecture also minimizes data movement by performing all intermediate computations near memory, including scaling, quantization, activation functions, and pooling layers.

We demonstrate that this approach yields speed-ups of up to 8.5x compared to state-of-the-art near-memory architectures, which must transfer data at the boundaries of CONV and/or FC layers. Compared to software execution, accelerations of up to 250x are observed on an edge platform that integrates Maxwell logic with a 32-bit RISC-V core. Maxwell-specific components account for only 10.6% of the memory area.

Gregoire A. Eggermann, Giovanni Ansaloni, and David Atienza, "Keep All in Memory with Maxwell: a Near-SRAM Computing Architecture for Edge AI Applications", accepted at ISQED 2025 [Download Preprint (PDF, 1.4 MB)]